Importance of Data Processing

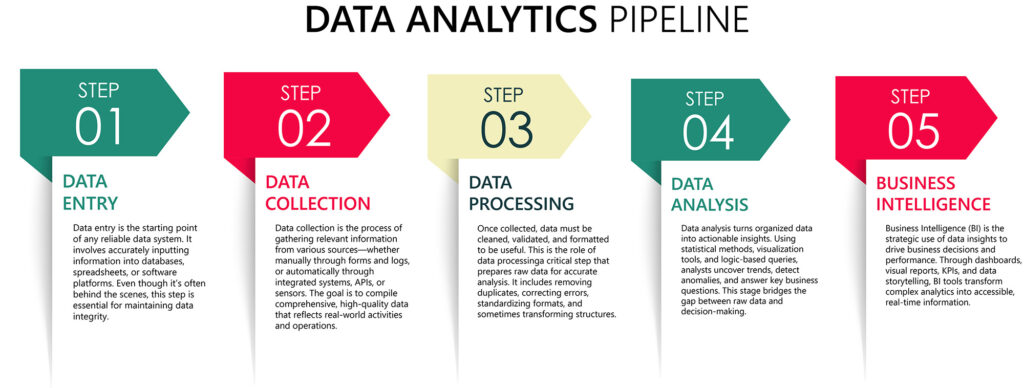

In the data lifecycle, collection gets a lot of attention, and rightly so. But once the data is captured, what happens next is just as important, if not more so. Enter data processing: the critical stage where raw information is shaped into usable, structured, and meaningful formats. For data professionals, this is where the real work begins. Clean, well-structured data doesn’t just happen; it’s the result of deliberate and disciplined processing.

What Is Data Processing?

Data processing is the transformation of raw data into a structured, clean, and analysis-ready format. It involves a variety of tasks including:

- Data cleaning (handling missing values, fixing errors)

- Data transformation (converting formats, standardizing fields)

- Integration (merging data from multiple sources)

- Aggregation (summarizing and grouping)

- Validation (ensuring consistency and accuracy)

It’s the bridge between raw input and actionable insight.
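As a rough illustration, the five tasks listed above can be sketched in a few lines of plain Python. The records, field names, and the policy of dropping rows with a missing amount are all assumptions made for this example, not a prescribed pipeline.

```python
from collections import defaultdict

# Hypothetical raw records from two sources (illustrative data only).
crm_rows = [
    {"id": "1", "region": " north ", "amount": "120.50"},
    {"id": "2", "region": "South", "amount": None},  # missing value
    {"id": "3", "region": "NORTH", "amount": "80"},
]
billing_rows = [{"id": "1", "paid": True}, {"id": "3", "paid": False}]

# Cleaning: drop rows with a missing amount (one possible policy among many).
cleaned = [r for r in crm_rows if r["amount"] is not None]

# Transformation: convert types and standardize text fields.
transformed = [
    {
        "id": int(r["id"]),
        "region": r["region"].strip().lower(),
        "amount": float(r["amount"]),
    }
    for r in cleaned
]

# Integration: merge in the billing flag by id.
paid_by_id = {int(b["id"]): b["paid"] for b in billing_rows}
integrated = [{**r, "paid": paid_by_id.get(r["id"])} for r in transformed]

# Aggregation: total amount per region.
totals = defaultdict(float)
for r in integrated:
    totals[r["region"]] += r["amount"]

# Validation: fail loudly if a basic expectation is broken.
assert all(r["amount"] >= 0 for r in integrated), "negative amount found"
assert all(r["paid"] is not None for r in integrated), "unmatched billing record"

print(dict(totals))
```

Each step here is a decision rather than a mechanical operation. Dropping the row with the missing amount, for instance, is a choice that a different team might reasonably replace with imputation or a manual review queue.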

Why It Matters

While modern tools can automate parts of the processing pipeline, the logic and decision-making behind it require human expertise. When done right, data processing:

- Reduces errors and outliers that skew results

- Ensures data integrity across systems

- Prepares data for advanced analytics and machine learning

- Supports reproducibility and compliance

- Saves countless hours of manual cleanup later

Poor or inconsistent processing, on the other hand, introduces hidden risks. Misaligned joins, duplicate records, or mismatched data types can quietly degrade the reliability of insights.
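To see how quietly this can happen, consider a minimal sketch in which one system stores order IDs as integers and another stores them as strings, with one shipment recorded twice. The data and field names below are hypothetical.

```python
# Hypothetical data: order IDs are integers in one system, strings in the other.
orders = {101: 250.0, 102: 90.0, 103: 40.0}
shipments = [
    {"order_id": "101"},
    {"order_id": "102"},
    {"order_id": "102"},  # duplicate record
    {"order_id": "103"},
]

# Naive join: string keys never match integer keys, so every lookup
# falls back to the default without raising an error.
naive_total = sum(orders.get(s["order_id"], 0.0) for s in shipments)

# Processed join: cast the key to a common type and skip duplicates.
seen = set()
processed_total = 0.0
for s in shipments:
    key = int(s["order_id"])
    if key in seen:
        continue
    seen.add(key)
    processed_total += orders.get(key, 0.0)

print(naive_total, processed_total)
```

The naive version runs without complaint and reports a shipped value of zero, while the processed version counts each order once. Nothing crashes in either case, which is why this kind of problem tends to surface only when someone questions the numbers.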

Real-World Example: When Processing Is Ignored

Let’s say your marketing and sales departments each track leads, but in separate systems. Marketing uses “Campaign Source” while sales logs “Lead Origin.” The fields are meant to match, but they aren’t standardized. One system uses “Google Ads” while the other says “GA” or “googleads.com”. When it’s time to run a report on campaign performance, nothing aligns. You’re stuck in Excel trying to normalize values manually. Worse, your executive dashboard now shows inaccurate performance metrics.

This is a classic result of skipping or underestimating the processing layer. Had the data been processed via transformation rules or field mappings, these inconsistencies would have been resolved automatically.
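A field mapping of this kind can be very small. The sketch below assumes a hand-maintained alias table and a hypothetical `normalize_source` helper. Treating “GA” as Google Ads is itself an assumption that would need confirming with the sales team, since the same abbreviation could mean something else in another organization.

```python
# Hypothetical mapping from observed variants to one canonical label.
SOURCE_ALIASES = {
    "google ads": "Google Ads",
    "ga": "Google Ads",
    "googleads.com": "Google Ads",
}


def normalize_source(raw: str) -> str:
    """Map a raw source value to its canonical label, if one is known."""
    key = raw.strip().lower()
    # Unknown values are kept (whitespace-trimmed) so they can be reviewed
    # and added to the mapping, rather than being silently guessed at.
    return SOURCE_ALIASES.get(key, raw.strip())


# Illustrative records using the two field names from the example.
marketing = [{"lead": "A", "Campaign Source": "Google Ads"}]
sales = [
    {"lead": "B", "Lead Origin": "GA"},
    {"lead": "C", "Lead Origin": "googleads.com"},
]

# Bring both systems onto one shared field with standardized values.
unified = [
    {"lead": r["lead"], "source": normalize_source(r["Campaign Source"])}
    for r in marketing
] + [
    {"lead": r["lead"], "source": normalize_source(r["Lead Origin"])}
    for r in sales
]

for row in unified:
    print(row["lead"], row["source"])
```

Once both systems feed through the same mapping, a campaign report can group on the single `source` field instead of reconciling spellings by hand.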

Data processing tends to live in the shadow of both data collection and data analysis, largely because of perception. From the outside, collection feels like action, and analysis feels like insight. But processing? It’s usually viewed as just the “in-between,” the mechanical part that people assume either happens automatically or should be someone else’s problem.

In many organizations, there’s also a disconnect between departments. The teams collecting data (e.g., customer service, marketing, operations) and the teams analyzing it (e.g., business intelligence, data science) often operate in silos. The processing step falls into a gap where no one owns the responsibility end-to-end. It’s either offloaded to whoever has the most time or done inconsistently across teams.

Additionally, data processing doesn’t always produce visible wins. A perfect join, a clean table, or a normalized data set doesn’t usually get attention unless it’s missing. As a result, the effort required to clean, transform, and validate data is underappreciated, even though it is often estimated to account for 60-80% of a data professional’s time.

Finally, the rise of no-code and low-code tools sometimes gives decision-makers a false sense of simplicity. Just drag, drop, and visualize, right? Not quite. These tools still rely on well-structured, logically processed data to work properly. Without it, dashboards become dangerous because they look correct but tell the wrong story.

In short, data processing is overlooked because it lacks flash, clear ownership, and surface-level visibility, but it’s exactly what makes trustworthy insights possible. It’s not glamorous, but it’s indispensable.